Code

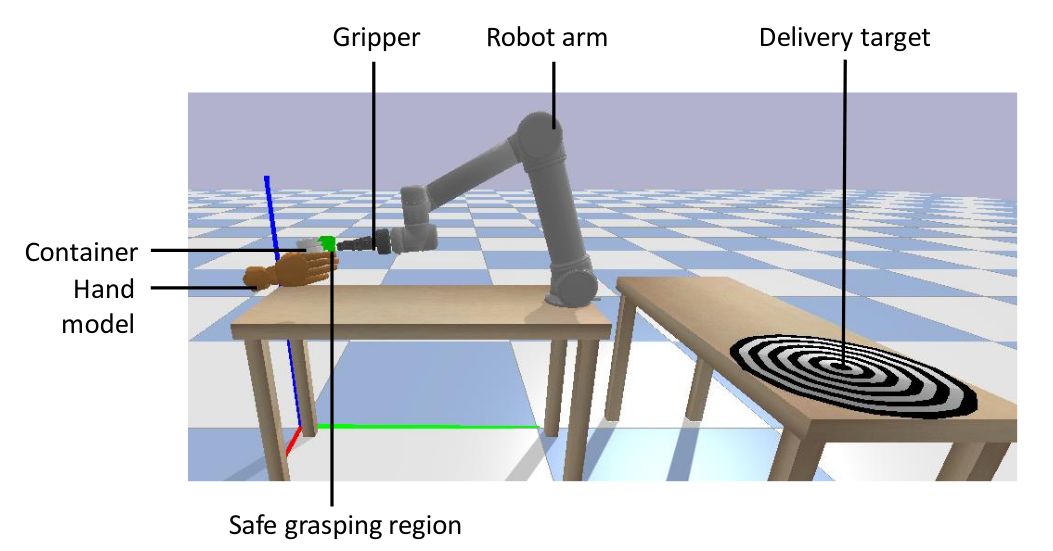

Human-to-Robot Handovers of Unseen Containers with Unknown Filling. Baseline for human-to-robot handovers of unseen containers. [code] [details]

Towards safe human-to-robot handovers of unknown containers. Software of the real-to-simulation framework for conducting safe human-to-robot handovers using visual estimates of the physical properties of unknown cups or drinking glasses, and of the human hands, from videos of a person manipulating the object. [code] [details]

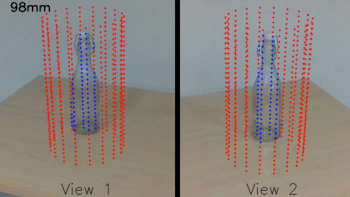

LoDE: Localisation and Object Dimensions Estimator. Software of the method that jointly localises container-like objects and estimates their dimensions with a generative 3D sampling model and multi-view 3D-2D iterative shape fitting, using two wide-baseline, calibrated RGB cameras. [code] [details]
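To make the 3D-2D fitting idea concrete, here is a toy sketch, not LoDE's implementation: candidate shapes are sampled in 3D, projected into two calibrated pinhole cameras, and scored by how well their projected bounding boxes overlap the boxes observed in each view. The intrinsics `K`, the camera poses, the cylinder shape model, the object location fixed at the world origin, and the exhaustive grid search (standing in for LoDE's iterative fitting) are all assumptions made for illustration.

```python
import math

# Hypothetical pinhole intrinsics shared by both calibrated cameras.
K = ((500.0, 0.0, 320.0), (0.0, 500.0, 240.0), (0.0, 0.0, 1.0))


def rot_y(angle):
    """Rotation matrix about the world y-axis."""
    c, s = math.cos(angle), math.sin(angle)
    return ((c, 0.0, s), (0.0, 1.0, 0.0), (-s, 0.0, c))


def project(p, R, t):
    """Pinhole projection of world point p for a camera with x_cam = R p + t."""
    xc = [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]
    return (K[0][0] * xc[0] / xc[2] + K[0][2],
            K[1][1] * xc[1] / xc[2] + K[1][2])


def sample_cylinder(radius, height, n_theta=24, n_h=5):
    """Surface samples of a hypothetical upright cylinder (axis along y) at the origin."""
    points = []
    for k in range(n_h):
        y = -height / 2 + height * k / (n_h - 1)
        for i in range(n_theta):
            a = 2 * math.pi * i / n_theta
            points.append((radius * math.cos(a), y, radius * math.sin(a)))
    return points


def bbox(points_2d):
    """Axis-aligned box (u_min, v_min, u_max, v_max) around 2D points."""
    us = [u for u, _ in points_2d]
    vs = [v for _, v in points_2d]
    return min(us), min(vs), max(us), max(vs)


def area(box):
    return (box[2] - box[0]) * (box[3] - box[1])


def iou(a, b):
    """Intersection over union of two boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    return inter / (area(a) + area(b) - inter)


def fit_dimensions(observed, views, radii, heights):
    """Grid search (stand-in for iterative fitting) for the best (radius, height)."""
    best, best_score = None, -1.0
    for r in radii:
        for h in heights:
            pts = sample_cylinder(r, h)
            # Mean box overlap across all calibrated views.
            score = sum(iou(bbox([project(p, R, t) for p in pts]), obs)
                        for (R, t), obs in zip(views, observed)) / len(views)
            if score > best_score:
                best, best_score = (r, h), score
    return best, best_score


if __name__ == "__main__":
    views = [(rot_y(0.0), (0.0, 0.0, 1.0)), (rot_y(math.pi / 2), (0.0, 0.0, 1.0))]
    truth = sample_cylinder(0.04, 0.12)
    observed = [bbox([project(p, R, t) for p in truth]) for R, t in views]
    print(fit_dimensions(observed, views, [0.02, 0.03, 0.04, 0.05], [0.08, 0.10, 0.12, 0.14]))
```

Because the observed boxes here are generated from a 0.04 m by 0.12 m cylinder, the search recovers those dimensions with a mean overlap of 1.0. Real observations would come from segmentation masks in each view, and the object location would be estimated jointly rather than fixed.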

WHC: Whole-Body Control with QP. Generic whole-body control library based on quadratic programming (QP), covering inverse dynamics and inverse kinematics. [code]
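The core idea behind QP-based control can be sketched with its simplest case: a velocity-level inverse kinematics step that minimises `||J dq - v||^2 + damping * ||dq||^2`. Without inequality constraints this QP has the closed-form optimum `(J^T J + damping I) dq = J^T v`, i.e. damped least squares. This is not WHC's API: the three-link planar arm, its link lengths, the damping and the gain are hypothetical, and a real whole-body controller would add joint limits, contacts and task priorities as QP constraints handled by a dedicated solver.

```python
import math

LINKS = (0.4, 0.3, 0.2)  # hypothetical link lengths of a planar 3-link arm [m]


def forward(q):
    """End-effector (x, y) of the planar arm for joint angles q."""
    x = y = angle = 0.0
    for length, qi in zip(LINKS, q):
        angle += qi
        x += length * math.cos(angle)
        y += length * math.sin(angle)
    return x, y


def jacobian(q):
    """2 x n Jacobian of the end-effector position with respect to q."""
    n = len(q)
    angles, acc = [], 0.0
    for qi in q:
        acc += qi
        angles.append(acc)
    J = [[0.0] * n, [0.0] * n]
    for j in range(n):
        for k in range(j, n):  # joint j moves every link k >= j
            J[0][j] -= LINKS[k] * math.sin(angles[k])
            J[1][j] += LINKS[k] * math.cos(angles[k])
    return J


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def qp_step(q, v, damping=1e-2):
    """Joint velocities minimising ||J dq - v||^2 + damping * ||dq||^2.

    With no constraints, the optimum solves (J^T J + damping I) dq = J^T v.
    """
    J = jacobian(q)
    n = len(q)
    H = [[sum(J[r][i] * J[r][j] for r in range(2)) + (damping if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    g = [sum(J[r][i] * v[r] for r in range(2)) for i in range(n)]
    return solve(H, g)


def reach(target, q, iters=200, gain=0.5):
    """Drive the end effector towards target with repeated QP steps."""
    for _ in range(iters):
        x, y = forward(q)
        v = (gain * (target[0] - x), gain * (target[1] - y))
        q = [qi + dqi for qi, dqi in zip(q, qp_step(q, v))]
    return q


if __name__ == "__main__":
    q = reach((0.5, 0.4), [0.2, 1.0, 1.0])
    print(forward(q))
```

The damping term keeps the step bounded near singular configurations at the cost of slightly slower convergence; because the arm is redundant (three joints for a 2D task), the regulariser also picks the smallest joint motion among the many that achieve the same end-effector velocity.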

Carefulness detection. Dynamical systems that classify new, unknown handovers as involving Careful Objects (comparable to handling a cup full of water) or Not-Careful Objects (comparable to handling an empty cup). [code]

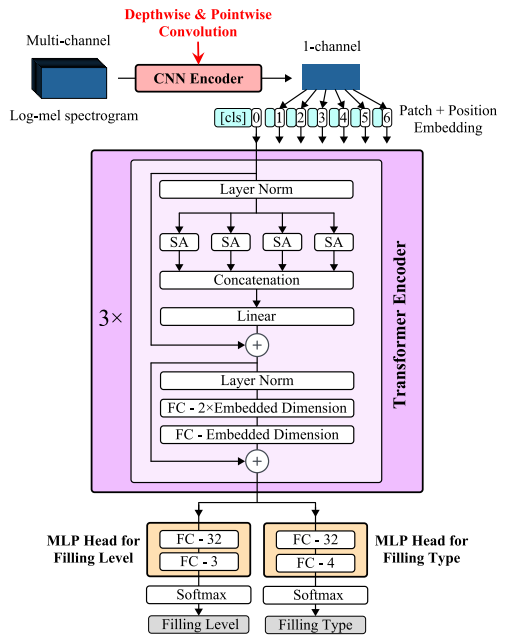

ACC: Audio Content Classification. Software of the method that identifies the action performed by a person manipulating a food box or a drinking glass (shaking or pouring) and then jointly classifies the type and level of the content within the container, using audio data converted into spectrograms. [code] [details]
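The spectrogram conversion that ACC builds on can be sketched with a short-time Fourier transform: the signal is cut into overlapping Hann-windowed frames, and the magnitude spectrum of each frame becomes one time step of the spectrogram. This pure-Python version uses a naive DFT to stay self-contained; the frame length, hop size and dB scaling are illustrative assumptions, and ACC's own preprocessing and classifiers are not reproduced here.

```python
import cmath
import math


def hann(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def spectrogram(signal, frame_len=256, hop=128):
    """Magnitude STFT as a list of frames; each frame has frame_len // 2 + 1 bins."""
    window = hann(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        seg = [signal[start + i] * window[i] for i in range(frame_len)]
        # Naive DFT of the windowed frame, keeping the non-negative frequencies.
        frames.append([
            abs(sum(seg[i] * cmath.exp(-2j * math.pi * k * i / frame_len)
                    for i in range(frame_len)))
            for k in range(frame_len // 2 + 1)
        ])
    return frames


def to_db(frames, eps=1e-10):
    """Convert magnitudes to decibels; eps avoids log10(0)."""
    return [[20 * math.log10(m + eps) for m in frame] for frame in frames]


if __name__ == "__main__":
    fs, frame_len = 8000, 64
    f0 = 8 * fs / frame_len  # a tone centred exactly on DFT bin 8 (1000 Hz)
    tone = [math.sin(2 * math.pi * f0 * n / fs) for n in range(1024)]
    spec = to_db(spectrogram(tone, frame_len=frame_len, hop=32))
    peaks = {max(range(len(f)), key=f.__getitem__) for f in spec}
    print(len(spec), peaks)  # 31 {8}
```

A tone placed exactly on bin 8 of a 64-sample frame peaks at bin 8 in every frame. In practice an FFT routine such as `numpy.fft.rfft` would replace the quadratic-time inner loop.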

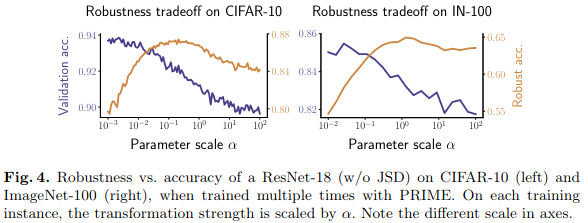

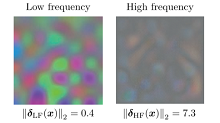

PRIME: A few primitives can boost robustness to common corruptions. Software of PRIME, a generic, plug-and-play data augmentation scheme built on simple families of max-entropy image transformations that confer robustness against common corruptions. [code] [paper]

NADs: Neural Anisotropy Directions. Software that reproduces the results on Neural Anisotropy Directions, a sequence of vectors that encapsulate the directional inductive bias of an architecture and encode its preference to separate the input data based on particular features. [code] [paper] [details]

Hold me tight! Software that reproduces the results on the influence of discriminative features on deep network boundaries. [code] [paper]

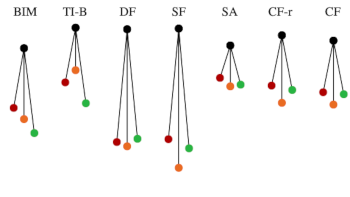

ColorFool: Semantic Adversarial Colorization. Software that identifies image regions using a semantic segmentation model and generates adversarial images by perturbing the color of semantic regions within the natural color range. [code] [paper] [details]
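The region-wise recoloring can be sketched as a random search: each semantic region gets its own bounded color shift, and perturbed candidates are drawn until the classifier's label changes. This is not ColorFool's algorithm: the hue rotation via Python's `colorsys` is a simple stand-in for ColorFool's own color perturbation, and the masks, per-region shift limits and `classify` callback are hypothetical inputs (a limit of 0 leaves a region untouched).

```python
import colorsys
import random


def recolor_region(image, mask, max_shift, rng):
    """Copy `image` (rows of RGB tuples in [0, 1]) and rotate the hue of the
    pixels where `mask` is True by one random shift in [-max_shift, max_shift]."""
    shift = rng.uniform(-max_shift, max_shift)
    out = []
    for row, mask_row in zip(image, mask):
        new_row = []
        for (r, g, b), inside in zip(row, mask_row):
            if inside:
                h, s, v = colorsys.rgb_to_hsv(r, g, b)
                # Saturation and value are kept, so the result stays a valid RGB color.
                r, g, b = colorsys.hsv_to_rgb((h + shift) % 1.0, s, v)
            new_row.append((r, g, b))
        out.append(new_row)
    return out


def color_attack(image, regions, classify, true_label, trials=100, seed=0):
    """Random search over region recolorings until the predicted label changes.

    `regions` is a hypothetical list of (mask, max_shift) pairs; returns None on failure.
    """
    rng = random.Random(seed)
    for _ in range(trials):
        candidate = image
        for mask, max_shift in regions:
            candidate = recolor_region(candidate, mask, max_shift, rng)
        if classify(candidate) != true_label:
            return candidate
    return None


def toy_classifier(img):
    """Hypothetical classifier: "red" while the top-left pixel stays strongly red."""
    return "red" if img[0][0][0] > 0.9 else "other"


if __name__ == "__main__":
    red = [[(1.0, 0.0, 0.0)] * 2 for _ in range(2)]
    top_row = [[True, True], [False, False]]
    print(color_attack(red, [(top_row, 0.3)], toy_classifier, "red"))
```

With the toy classifier, any hue rotation that lowers the top-left pixel's red channel below 0.9 counts as a successful attack, while the bottom row, outside the mask, is returned unchanged.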

Challenge Evaluation Toolkit. Official evaluation toolkit for the CORSMAL Challenge. [code] [details]

Toolkit for the CHOC dataset. Official toolkit with utilities for the CORSMAL Hand-Occluded Containers (CHOC) dataset. [code] [details] [dataset]

Toolkit for rendering composite images. Official toolkit to automatically render composite images of handheld containers (synthetic objects, hands and forearms) over real backgrounds using Blender and Python. [code] [details] [dataset]

CHOC-NOCS for 6D object pose estimation with hand occlusions. Software to run the multi-branch convolutional neural network, adapted from NOCS and re-trained at QMUL, for category-level 6D pose estimation on images of hand-occluded containers. [code] [details] [dataset]

Software from people using project data and models

Improving Generalization of Deep Networks for Estimating Physical Properties of Containers and Fillings. Software of the solution submitted by team Squids to the 2022 ICASSP CORSMAL challenge. [code] [paper]

Shared Transformer Encoder with Mask-based 3D Model Estimation for Container Mass Estimation. Software of the solution submitted by team KEIO-ICS to the 2022 ICASSP CORSMAL challenge. [code]

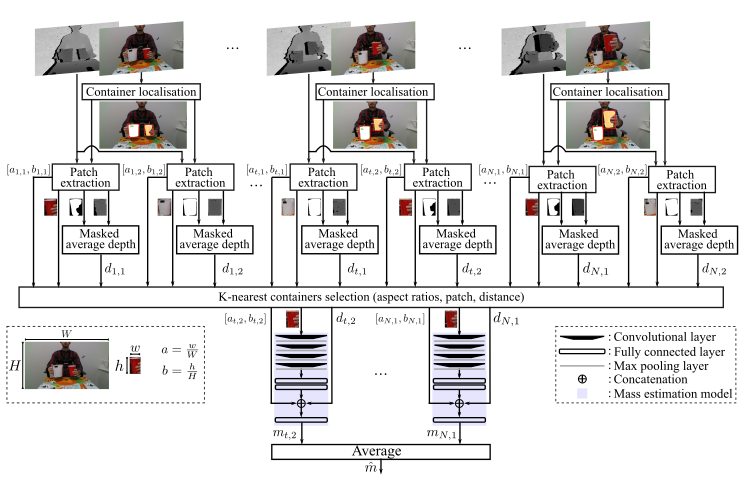

Container localisation and mass estimation with an RGB-D camera. Software of the solution submitted by team Visual to the 2022 ICASSP CORSMAL challenge. [code] [paper]

Filling mass estimation using multi-modal observations of human-robot handovers. Software of the solution submitted by team Because It's Tactile to the 2020 CORSMAL challenge. [code] [paper] [video] [slides]

Audio-Visual Hybrid Approach for Filling Mass Estimation. Software of the solution submitted by team HVRL to the 2020 CORSMAL challenge. [code] [paper] [video] [slides]

NTNU-ERC solution for filling mass estimation. Software of the solution submitted by team NTNU-ERC to the 2020 CORSMAL challenge. [code] [slides]
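Several of these solutions target filling mass. One common decomposition, shown here as a hedged sketch rather than any team's method, multiplies the estimated filling level (as a fraction of capacity) by the container capacity and by the density of the estimated filling type. The density table is a hypothetical placeholder: the value of about 1 g/mL for water is physical, while the rice and pasta values are illustrative only and are not the challenge's reference values.

```python
# Hypothetical placeholder densities in g/mL; only water (~1 g/mL) is physical.
DENSITY_G_PER_ML = {"water": 1.0, "rice": 0.85, "pasta": 0.41}


def filling_mass(level, filling_type, capacity_ml):
    """Filling mass in grams = fill fraction x capacity (mL) x density (g/mL)."""
    if not 0.0 <= level <= 1.0:
        raise ValueError("level must be a fraction of capacity in [0, 1]")
    if filling_type == "none" or level == 0.0:
        return 0.0
    return level * capacity_ml * DENSITY_G_PER_ML[filling_type]


if __name__ == "__main__":
    print(filling_mass(0.5, "water", 500.0))  # 250.0
    print(filling_mass(0.9, "rice", 200.0))
```

Because the three estimates are multiplied, their relative errors roughly add up in the final mass, so a modest error in capacity or filling level propagates directly into the mass estimate.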

Other software from the CORSMAL team and related to the project

A unified framework for coordinated multi-arm motion planning. S. S. Mirrazavi Salehian, N. Figueroa, A. Billard. The International Journal of Robotics Research, Vol. 37, Issue 10, pp. 1205-1232, April 2018. Libraries implementing a unified framework for coordinated multi-arm motion planning, based on a centralised inverse kinematics solver with self-collision avoidance. [code] [paper]

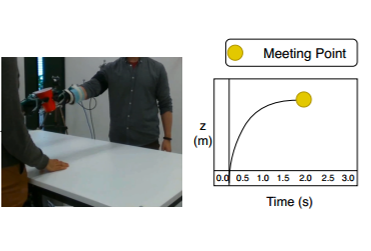

A human-inspired controller for fluid human-robot handovers. J. Medina, F. Duvallet, M. Karnam, A. Billard. Proc. of Int. Conference on Humanoid Robots, Cancun, Mexico, 15-17 November 2016. ROS package for estimating the load share of an object supported jointly by a robot and a third party (such as a person). [code] [paper]
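The notion of load share can be illustrated with a simple ratio: the vertical force the robot measures at its wrist, divided by the object's weight, gives the fraction of the load the robot carries, and the remainder (1 minus the share) is attributed to the other party. This is an assumption-laden sketch, not the estimator in the ROS package; the force readings, the known object mass and the exponential smoothing factor are hypothetical.

```python
G = 9.81  # gravitational acceleration [m/s^2]


def load_share(vertical_force_n, object_mass_kg):
    """Fraction of the object's weight carried by the robot, clamped to [0, 1]."""
    weight_n = object_mass_kg * G
    return min(1.0, max(0.0, vertical_force_n / weight_n))


def smoothed_shares(forces_n, object_mass_kg, alpha=0.2):
    """Load share over time after exponential smoothing of noisy force readings.

    alpha is a hypothetical smoothing factor: higher values react faster but pass more noise.
    """
    shares, f_hat = [], None
    for f in forces_n:
        f_hat = f if f_hat is None else alpha * f + (1 - alpha) * f_hat
        shares.append(load_share(f_hat, object_mass_kg))
    return shares


if __name__ == "__main__":
    # Hypothetical wrist readings [N] for a 0.5 kg object as a person lets go.
    readings = [0.0, 0.5, 1.5, 3.0, 4.5, 4.9, 4.9]
    print([round(s, 2) for s in smoothed_shares(readings, 0.5)])
```

A practical estimator would also compensate for the weight of the gripper itself and for dynamic forces during motion before forming this ratio.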

Kuka-lwr-ros. ROS package to control the KUKA LWR 4, both in simulation and on the physical robot. [code]

A long short-term memory convolutional neural network for first-person vision activity recognition. G. Abebe, A. Cavallaro. Proc. of ICCV workshop on Assistive Computer Vision and Robotics (ACVR), Venice, October 28, 2017. Software for first-person vision activity recognition. [code] [paper]

Inertial-Vision: cross-domain knowledge transfer for wearable sensors. G. Abebe, A. Cavallaro. Proc. of ICCV workshop on Assistive Computer Vision and Robotics (ACVR), Venice, October 28, 2017. Software for classifying activities from first-person videos using cross-domain knowledge transfer for wearable sensors. [code] [paper]

Active visual tracking in multi-agent scenarios. Y. Wang, A. Cavallaro. Proc. of IEEE Int. Conference on Advanced Signal and Video based Surveillance (AVSS), Lecce, 29 August - 1 September 2017. Software for active visual target tracking in multi-agent scenarios. [code] [paper]